For instance, someone might ideally want to read even-handed, well-researched political reporting, but she might actually be drawn to something more sensationalized like political opinion clickbait.Īlthough temptations like these have always hindered people from acting in line with their ideal preferences, recommendation algorithms may in fact be making people's struggles even worse. People often struggle to do what they ideally want as a result of this conflict between their actual and ideal preferences 6, 7.

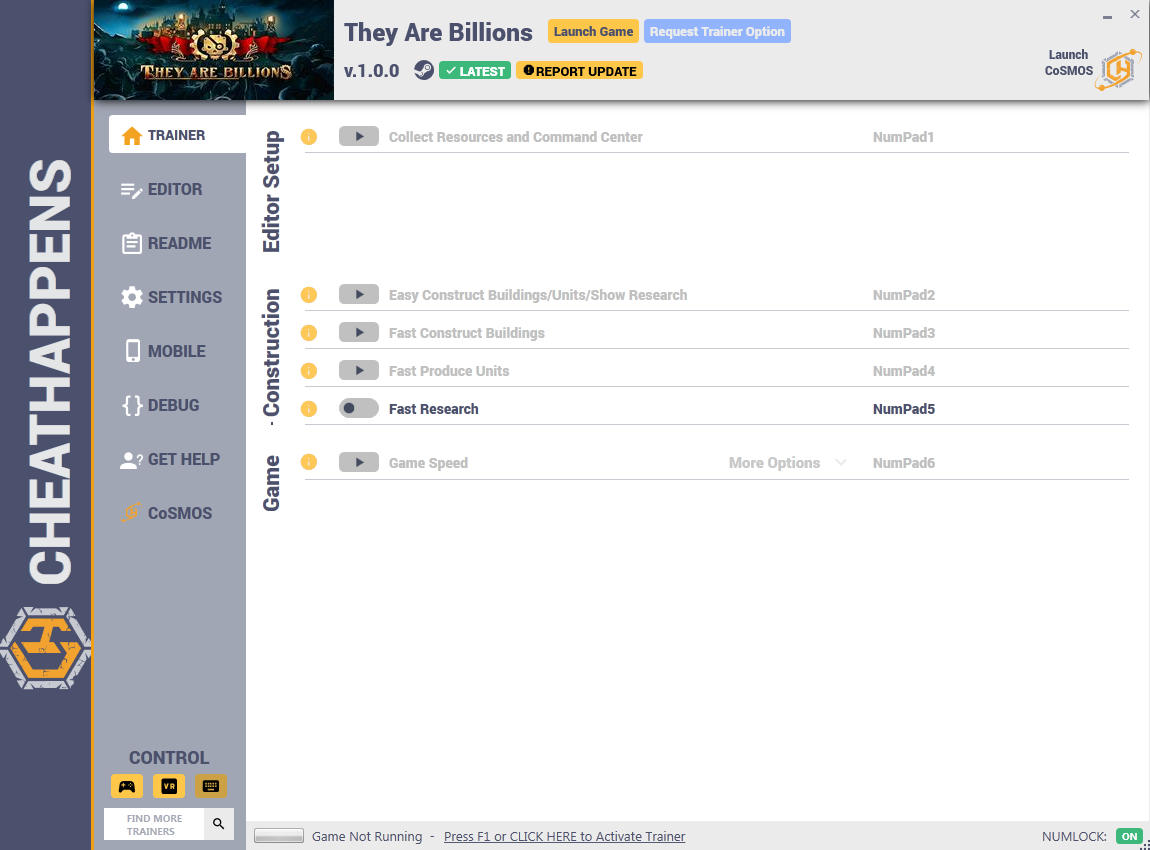

On topics as varied as social groups, politicians, policy issues, companies, behaviors, and relationship partners, people commonly hold an actual preference that differs from the ideal preference they desire to hold 4, 5, 6 (For more information about actual-ideal preference discrepancies, including why they emerge and their distinction from related constructs, see Supplementary Information). This may in part be due to the types of preferences that these algorithms target. These recommendations are tailored to user preferences, yet it has become increasingly clear that these algorithms can have harmful effects on individuals and society 1, 2, 3. Social media algorithms recommend content to billions of people every day. Our results suggest that users and companies would be better off if recommendation algorithms learned what each person was striving for and nudged individuals toward their own unique ideals. Moreover, of note to companies, targeting ideal preferences increased users' willingness to pay for the service, the extent to which they felt the company had their best interest at heart, and their likelihood of using the service again. We found that targeting ideal rather than actual preferences resulted in somewhat fewer clicks, but it also increased the extent to which people felt better off and that their time was well spent. Then, in a high-powered, pre-registered experiment ( n = 6488), we measured the effects of these recommendation algorithms. To examine this, we built algorithmic recommendation systems that generated real-time, personalized recommendations tailored to either a person’s actual or ideal preferences. actual) preferences would provide meaningful benefits to both users and companies. Here we show that tailoring recommendation algorithms to ideal (vs. By focusing on maximizing engagement, recommendation algorithms appear to be exacerbating this struggle. 
They Are Billions (Steam) 12-1-20 Trainer +12Ĭheats may take up to a minute to show effect in game depending on your system specs, Also some cheats have specific instructions before activating, Must read them to get the trainer working properly, Some missions require you to have structures to finish them, If you skip constructing and only count on cheats it may bug your mission.People often struggle to do what they ideally want because of a conflict between their actual and ideal preferences. They Are Billions (Steam) 11-17-20 Trainer +12 They Are Billions (Steam) 11-12-20 Trainer +12 They Are Billions (Steam) 11-12-20 Trainer +13 They Are Billions (Steam) 11-10-20 Trainer +13 They Are Billions (Steam) 7-21-20 Trainer +13 They Are Billions (Steam) 6-9-20 Trainer +13 They Are Billions (Steam) 3-31-20 Trainer +13 They Are Billions (Steam) 2-5-20 Trainer +13 They Are Billions (Steam) 8-8-19 Trainer +13 They Are Billions (Steam) 7-22-19 Trainer +8

